Can recurrent neural networks truly revolutionise how we solve complex problems in natural language processing? One bold claim is that these networks are not just another tool but a leap forward in computational linguistics. Examining how recurrent neural networks (RNNs) work reveals their impact on modern technology, particularly in text prediction and sequence modelling.

RNNs have become central to many applications, from generating coherent sentences in chatbots to improving speech recognition systems. For instance, when applied to large datasets such as those built from The Guardian Quick Crossword solutions, or to NLP projects involving dictionaries, RNNs provide an effective mechanism for modelling context and predicting the next word. This capability comes from their internal memory, the hidden state. At each timestep the network combines the current input with the state carried over from the previous step. Information from earlier elements can therefore influence later predictions, which lets the network capture dependencies between elements in a sequence.
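The following minimal NumPy sketch of a vanilla (Elman) RNN forward pass makes the idea of an internal memory state concrete. The function name `rnn_forward`, the weight names and the toy sizes are illustrative assumptions, not taken from any particular system. The weights are random and untrained, so only the shapes of the outputs are meaningful. At each step the hidden state `h` is recomputed from the current input and the previous `h`, and a softmax over the vocabulary gives a probability distribution for the next token.

```python
import numpy as np

def rnn_forward(x_seq, W_xh, W_hh, b_h, W_hy, b_y):
    """Run a vanilla (Elman) RNN over a sequence.

    x_seq: array of shape (T, input_dim), one input vector per timestep.
    Returns the hidden states, shape (T, hidden_dim), and for each timestep
    a probability distribution over the next token, shape (T, vocab_size).
    """
    h = np.zeros(W_hh.shape[0])  # initial memory state h_0
    hs, probs = [], []
    for x_t in x_seq:
        # The new state mixes the current input with the previous state;
        # this is how information from earlier tokens is carried forward.
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
        logits = W_hy @ h + b_y
        p = np.exp(logits - logits.max())  # numerically stable softmax
        probs.append(p / p.sum())
        hs.append(h)
    return np.array(hs), np.array(probs)

# Toy, untrained weights (hypothetical sizes chosen for illustration).
rng = np.random.default_rng(0)
vocab_size, hidden_dim = 5, 8
W_xh = rng.normal(scale=0.1, size=(hidden_dim, vocab_size))
W_hh = rng.normal(scale=0.1, size=(hidden_dim, hidden_dim))
W_hy = rng.normal(scale=0.1, size=(vocab_size, hidden_dim))
b_h, b_y = np.zeros(hidden_dim), np.zeros(vocab_size)

token_ids = [0, 3, 1, 4]
x_seq = np.eye(vocab_size)[token_ids]  # one-hot encode each token ID
hs, probs = rnn_forward(x_seq, W_xh, W_hh, b_h, W_hy, b_y)
print(hs.shape, probs.shape)  # (4, 8) (4, 5)
```

In a trained model, `probs[t]` would be the network's prediction for the token that follows position `t`, conditioned on every token seen so far through `h`.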

| Attribute | Details |
|---|---|
| Name | Recurrent Neural Networks |
| Type | Machine Learning Model |
| Application Areas | Natural Language Processing, Speech Recognition, Time Series Analysis |
| Notable Implementations | Google Translate, Apple Siri, Amazon Alexa |
| Reference Website | Wikipedia - Recurrent Neural Network |

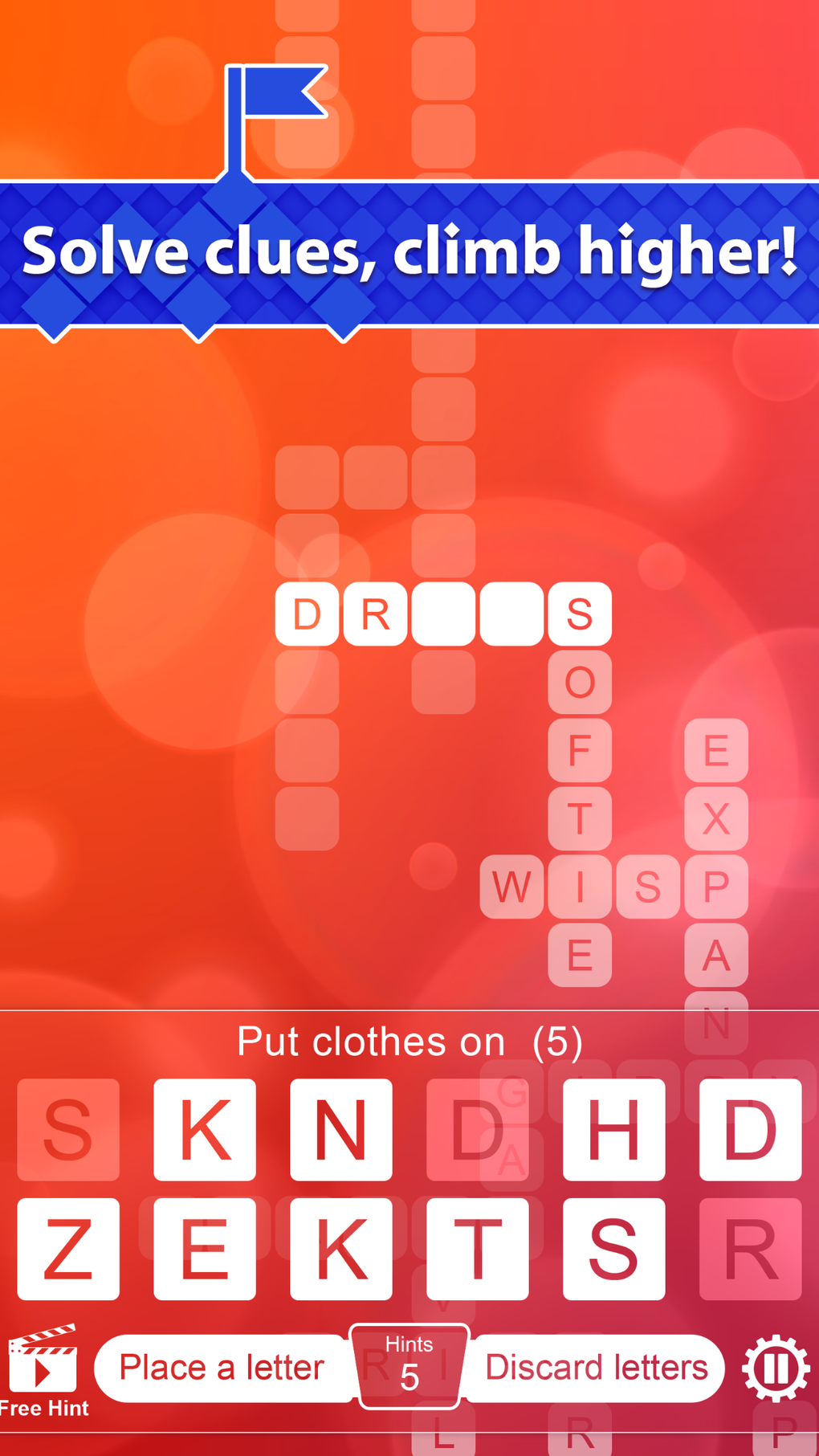

In practical terms, consider an RNN processing crossword clues such as those in The Guardian's Quick crossword No 17,160. By training on many examples, it learns recurring patterns and can eventually suggest appropriate answers based on contextual cues in the clue. Similarly, in an NLP project focused on dictionary compilation, RNNs can help developers identify relationships among different word forms, enriching vocabulary databases.

Datasets used to train these models often contain thousands, if not millions, of entries. An excerpt from one such vocabulary might include terms like 'quick': 1601, 'o': 1602, 'apartment': 1603, 'nasty': 1604, 'arthur': 1605, alongside later entries such as 'guardian': 7438, 'cheers': 7439, 'tightly': 7440, 'du': 7441, 'eh': 7442. Each entry maps a token to an integer index. Text is converted into sequences of these indices, which serve as input for the network's learning process.
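A vocabulary like the one excerpted above can be built by counting words and assigning IDs. The sketch below is a minimal, hypothetical version in plain Python. The names `build_vocab`, `encode` and `next_word_pairs`, the reserved `<pad>`/`<unk>` IDs and the whitespace tokeniser are all assumptions made for illustration, so the IDs it produces will not match the excerpt. It also shows how an encoded sequence becomes input/target pairs for next-word prediction.

```python
from collections import Counter

def build_vocab(texts, min_count=1):
    """Map each word to an integer ID, most frequent words first.

    IDs 0 and 1 are reserved for padding and unknown words; this is an
    assumption of the sketch, and real pipelines choose their own scheme.
    """
    counts = Counter(word for text in texts for word in text.lower().split())
    vocab = {"<pad>": 0, "<unk>": 1}
    # most_common() orders by count; ties keep first-encountered order.
    for word, count in counts.most_common():
        if count >= min_count:
            vocab[word] = len(vocab)
    return vocab

def encode(text, vocab):
    """Convert text to a list of token IDs, using <unk> for unseen words."""
    return [vocab.get(word, vocab["<unk>"]) for word in text.lower().split()]

def next_word_pairs(ids):
    """Shift a sequence by one to get (input, target) for next-word prediction."""
    return ids[:-1], ids[1:]

corpus = ["the quick fox", "the lazy dog"]
vocab = build_vocab(corpus)
ids = encode("the quick cat", vocab)  # "cat" is not in the vocabulary
print(ids)                   # [2, 3, 1]
print(next_word_pairs(ids))  # ([2, 3], [3, 1])
```

During training, the network reads each input ID in turn and is penalised when its predicted distribution puts low probability on the corresponding target ID.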

Specific architectures perform better or worse under different conditions. Long Short-Term Memory (LSTM) units are one popular variant, designed specifically to address the vanishing-gradient problem of traditional RNNs: error signals shrink as they are propagated back through many timesteps, which makes long-range dependencies hard to learn. LSTM units incorporate gating mechanisms that selectively retain or discard information across timesteps.
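The gating mechanism can be sketched as a single LSTM timestep in NumPy. This follows the standard LSTM formulation with forget, input and output gates (no peephole connections). The stacked weight layout, the function names and the toy sizes are assumptions of this sketch, not the layout used by any specific library.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM timestep.

    W has shape (4 * hidden_dim, hidden_dim + input_dim) and stacks the
    weights for the forget, input, candidate and output transforms;
    b has shape (4 * hidden_dim,).
    """
    n = h_prev.shape[0]
    z = W @ np.concatenate([h_prev, x_t]) + b
    f = sigmoid(z[:n])           # forget gate: how much of c_prev to keep
    i = sigmoid(z[n:2 * n])      # input gate: how much new content to write
    g = np.tanh(z[2 * n:3 * n])  # candidate content
    o = sigmoid(z[3 * n:])       # output gate: how much of the cell to expose
    # The additive cell update helps gradients flow across many timesteps.
    c = f * c_prev + i * g
    h = o * np.tanh(c)
    return h, c

# Toy, untrained parameters (hypothetical sizes).
rng = np.random.default_rng(0)
input_dim, hidden_dim = 3, 4
W = rng.normal(scale=0.1, size=(4 * hidden_dim, hidden_dim + input_dim))
b = np.zeros(4 * hidden_dim)
h, c = np.zeros(hidden_dim), np.zeros(hidden_dim)
for x_t in rng.normal(size=(5, input_dim)):  # a sequence of 5 input vectors
    h, c = lstm_step(x_t, h, c, W, b)
print(h.shape, c.shape)  # (4,) (4,)
```

When the forget gate is close to 1 and the input gate close to 0, the cell state passes through a timestep almost unchanged. This is the selective retention that plain RNNs lack.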

Moreover, advances continue to shape this field. Researchers use bidirectional RNNs, which process a sequence in both directions with two separate recurrent layers, so the representation at each position reflects both past and future context. This tends to improve accuracy on tasks where the entire input sequence is available before predictions are made. Such innovations underscore the dynamic nature of machine learning research, in which continuous refinement leads to increasingly sophisticated algorithms tailored to diverse real-world scenarios.
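A bidirectional layer can be sketched by running two independent RNNs, one over the sequence and one over its reverse, and concatenating their states position by position. This NumPy sketch uses vanilla tanh cells for brevity (LSTM cells are common in practice). All names and sizes are illustrative assumptions.

```python
import numpy as np

def rnn_states(x_seq, W_xh, W_hh, b_h):
    """Hidden states of a vanilla RNN run left-to-right over x_seq."""
    h = np.zeros(W_hh.shape[0])
    states = []
    for x_t in x_seq:
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
        states.append(h)
    return np.array(states)

def birnn_states(x_seq, fwd_params, bwd_params):
    """Concatenate forward and backward hidden states at each timestep."""
    h_fwd = rnn_states(x_seq, *fwd_params)               # position t sees x_1 .. x_t
    h_bwd = rnn_states(x_seq[::-1], *bwd_params)[::-1]  # position t sees x_t .. x_T
    return np.concatenate([h_fwd, h_bwd], axis=1)

def init_params(rng, input_dim, hidden_dim):
    """Hypothetical small random initialisation for one direction."""
    return (rng.normal(scale=0.1, size=(hidden_dim, input_dim)),
            rng.normal(scale=0.1, size=(hidden_dim, hidden_dim)),
            np.zeros(hidden_dim))

rng = np.random.default_rng(0)
x_seq = rng.normal(size=(6, 3))  # 6 timesteps, 3 features each
fwd, bwd = init_params(rng, 3, 4), init_params(rng, 3, 4)
out = birnn_states(x_seq, fwd, bwd)
print(out.shape)  # (6, 8)
```

The forward half of the state at position t depends only on inputs up to t, while the backward half depends only on inputs from t onward. Changing the final input therefore alters the backward half at position 0 but leaves its forward half untouched.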

As illustrated earlier, integrating RNNs into existing workflows offers tangible benefits, ranging from more efficient automated tasks to improved user experiences on interactive AI-powered platforms. However, challenges remain. Computational costs rise as models are scaled up, partly because an RNN processes timesteps one after another, which limits parallelism. There are also ethical concerns about privacy, given the extensive data collection that robust model training requires.

Despite these hurdles, optimism prevails about future developments in this domain. As hardware improves alongside algorithmic breakthroughs, opportunities abound to expand applications beyond current boundaries. Imagine self-driving cars equipped with advanced dialogue systems that use RNN-based natural language processing to enable seamless communication between drivers and vehicles. A scenario once relegated to science fiction now edges closer to reality, thanks largely to progress in recurrent neural network research.

Furthermore, collaborations spanning academia, industry, and government foster innovation in artificial intelligence, including work centred on recurrent neural networks. Joint ventures that address societal needs through technology show how collective effort can benefit society at large. Initiatives promoting open-source software development, together with accessible educational resources, enable aspiring professionals worldwide to contribute meaningfully to advancing the field.

Ultimately, what began decades ago as a theoretical construct has evolved into a powerful tool that shapes everyday life. Applications range from predictive typing features in word processors that help journalists draft compelling stories to personalised learning pathways tailored to individual students. All of them owe much to the foundational work on simple yet elegant mathematical formulations that describe the temporal dynamics of sequential data.

Thus, embracing recurrent neural networks means acknowledging both the potential rewards and the inevitable risks of rapid technological advancement. Striking a balance requires vigilant oversight to ensure that deployment is responsible and prioritises human welfare above all else. Only then can progress unfold sustainably, benefiting generations to come while preserving the integrity and public trust on which ongoing scientific discovery depends, a pursuit driven by curious minds willing to explore unknown territory.